PI: Kalle Åström (LU). Co-PI: Cristian Sminchisescu (LU)

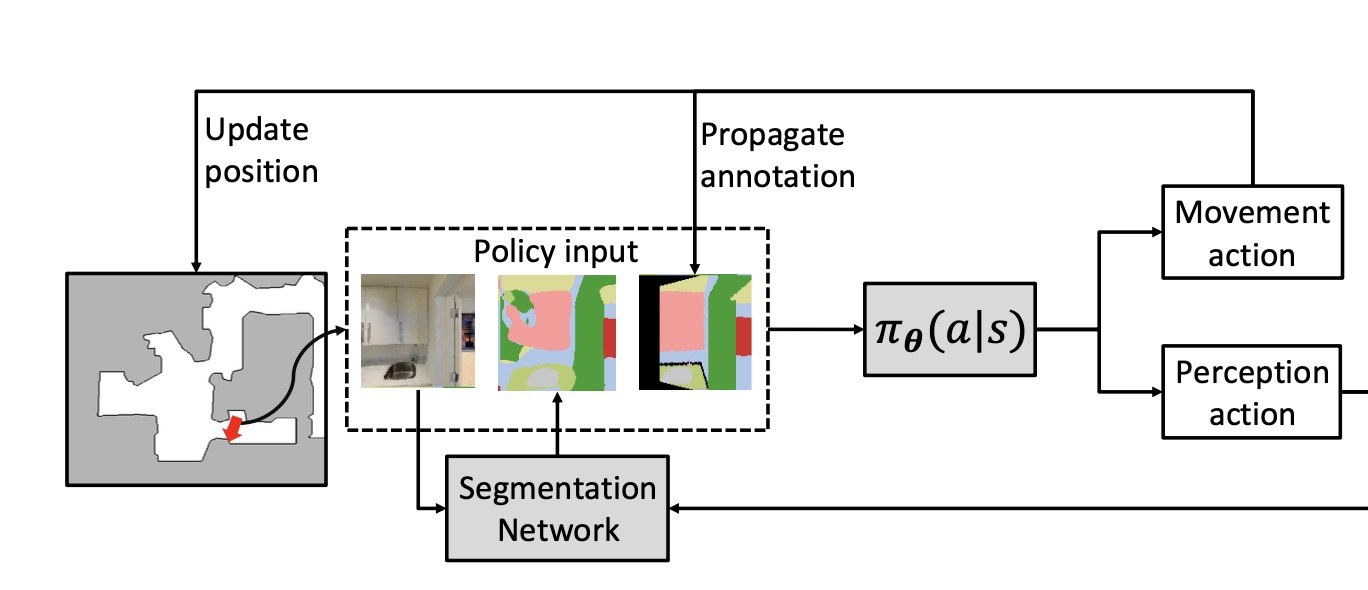

We focus on the task of embodied visual active learning, in which an agent explores a 3D environment with the goal of acquiring visual scene understanding by actively selecting the views for which it requests annotation. Today's deep visual recognition pipelines, while accurate on some datasets and benchmarks, tend not to generalize well to certain real-world scenarios. In robotic perception there is often a need to refine recognition capabilities for the conditions under which the robot operates (e.g. cluttered indoor environments or poor illumination). This motivates our proposal (and new task), which can be interpreted as a form of lifelong learning in which an agent's visual perception continually improves during its lifetime.

To study embodied visual active learning in a concrete setup, we plan to develop a set of methods, both learned and pre-specified and with different levels of knowledge of the environment, that explore the environment and acquire informative annotated views on which to train an underlying segmentation network. The learned methods would use reinforcement learning with a reward function that balances two competing objectives: task accuracy (which requires exploring the environment) and control over the amount of annotated data requested.

We plan to extensively evaluate our proposed models in the photorealistic Matterport3D simulator as well as in real scenes. To the best of our knowledge, this would be the first work to explore visual active learning for embodied agents navigating in realistic 3D environments. This proposal falls under ELLIIT focus theme 1 (Autonomous Vehicles and Robots) and within the Emerging Research Thrusts, Technologies, and Challenges (AI, large-scale algorithms, machine learning, deep learning, and XAI), in particular Perception-Action Learning.
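To make the reward trade-off described above more concrete, here is a minimal sketch of one possible shape for such a reward. It assumes, purely for illustration, that task accuracy is measured as segmentation mIoU on held-out views and that each annotation request incurs a fixed linear cost. The names `StepOutcome`, `step_reward`, `episode_return`, and `annotation_cost` are hypothetical and not part of the proposal; the actual reward design is left open by the project.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class StepOutcome:
    """Outcome of one agent step (all fields are illustrative assumptions)."""
    miou_before: float  # assumed metric: segmentation mIoU on held-out views before the step
    miou_after: float   # mIoU after retraining on any newly annotated view
    annotated: bool     # True if the agent requested an annotation at this step


def step_reward(outcome: StepOutcome, annotation_cost: float = 0.01) -> float:
    """Hypothetical per-step reward: accuracy gain minus a fixed annotation cost."""
    gain = outcome.miou_after - outcome.miou_before
    cost = annotation_cost if outcome.annotated else 0.0
    return gain - cost


def episode_return(outcomes: Iterable[StepOutcome],
                   annotation_cost: float = 0.01,
                   gamma: float = 1.0) -> float:
    """Discounted sum of the hypothetical step rewards over one exploration episode."""
    total, discount = 0.0, 1.0
    for outcome in outcomes:
        total += discount * step_reward(outcome, annotation_cost)
        discount *= gamma
    return total


if __name__ == "__main__":
    episode = [
        StepOutcome(0.40, 0.45, annotated=True),   # informative view: +0.05 mIoU, pays cost
        StepOutcome(0.45, 0.45, annotated=False),  # exploration move, no label requested
        StepOutcome(0.45, 0.45, annotated=True),   # redundant view: pays cost, no gain
    ]
    print(round(episode_return(episode), 4))  # 0.03
```

Under this assumed form, raising `annotation_cost` pushes a learned policy toward requesting fewer, more informative annotations, while a cost near zero rewards labelling almost every view; tuning that single weight is one simple way to express the accuracy-versus-annotation balance.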

Project number: A14