PI: Kalle Åström, Lund University

co-PI: Fredrik Gustafsson, Linköping University

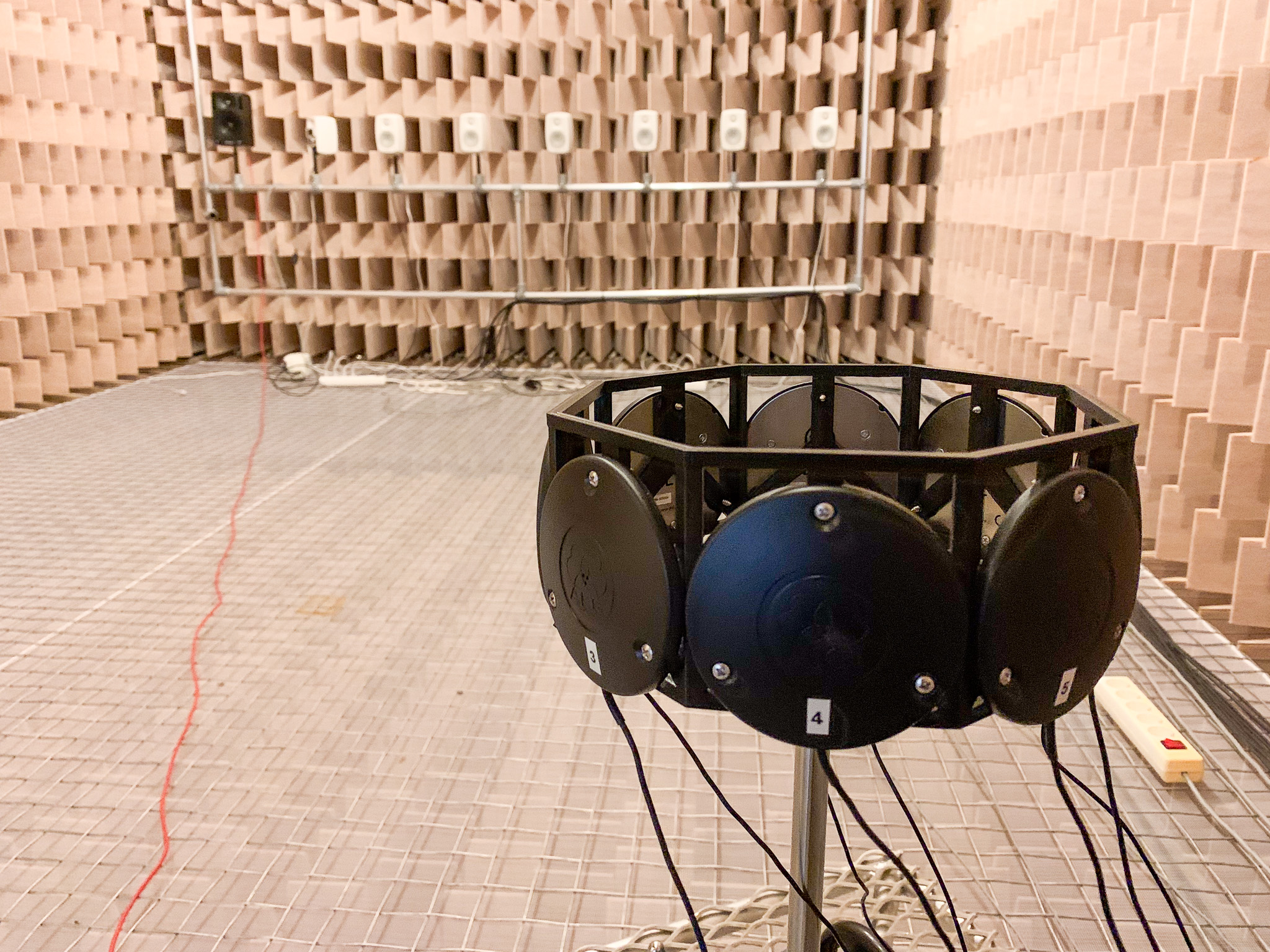

Tracking and mapping systems are central to many technologies, from wildlife monitoring and search-and-rescue to autonomous vehicles and industrial safety. They typically consist of a front-end that detects signals and a back-end that estimates positions and tracks targets. Traditionally, these two stages are treated separately: the front-end delivers measurements such as time or direction of arrival, and the back-end processes them into positions, maps and tracks. This separation simplifies design but breaks down in realistic conditions with multiple sources, noisy environments, or overlapping signals, where the key challenge becomes deciding which detection belongs to which target.

Our proposal addresses this challenge by tightly integrating machine learning into the front-end of tracking systems. Instead of producing only hard measurements, the front-end will output richer features (class labels, probabilistic arrival times, and signal embeddings) that carry both spatial and temporal information. These features will be propagated into the back-end in a statistically sound way, ensuring that uncertainties are preserved all the way from raw signals to target tracks. By combining the strengths of classical estimation and modern learning, we aim to build robust end-to-end tracking systems capable of operating reliably in cluttered, low-SNR, and multi-target scenarios. Results from the back-end can also be fed back to the front-end, both offline and online.

The research builds directly on successful collaborations between Linköping and Lund Universities. Our joint work spans acoustic and seismic arrays for drone and wildlife tracking, inertial sensors for intrusion detection, and automotive safety applications. These studies provide both field data and established baselines against which the proposed integrated approach will be benchmarked.

The outcome will be a new generation of components and tracking systems that are not only more accurate but also more adaptive, interpretable, and deployable in demanding real-world environments.

Project number: F15