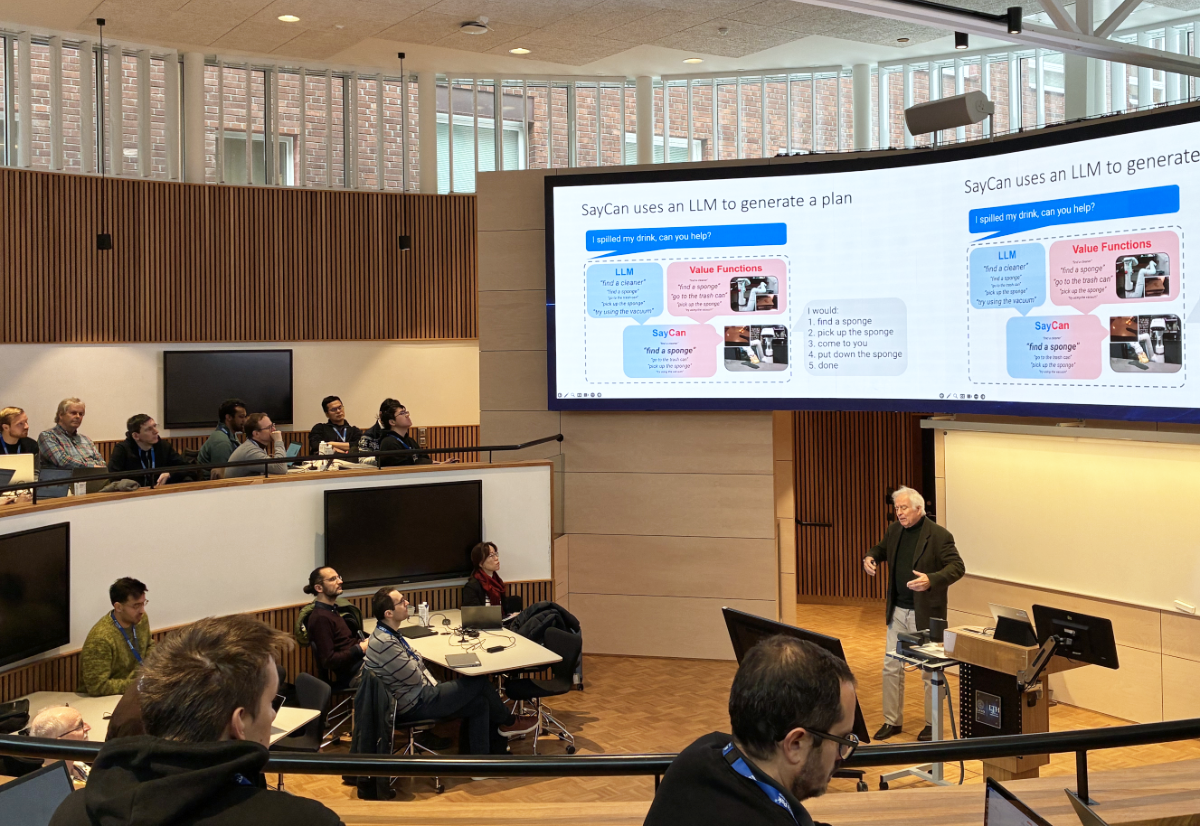

Photos from the symposium

Symposium Program

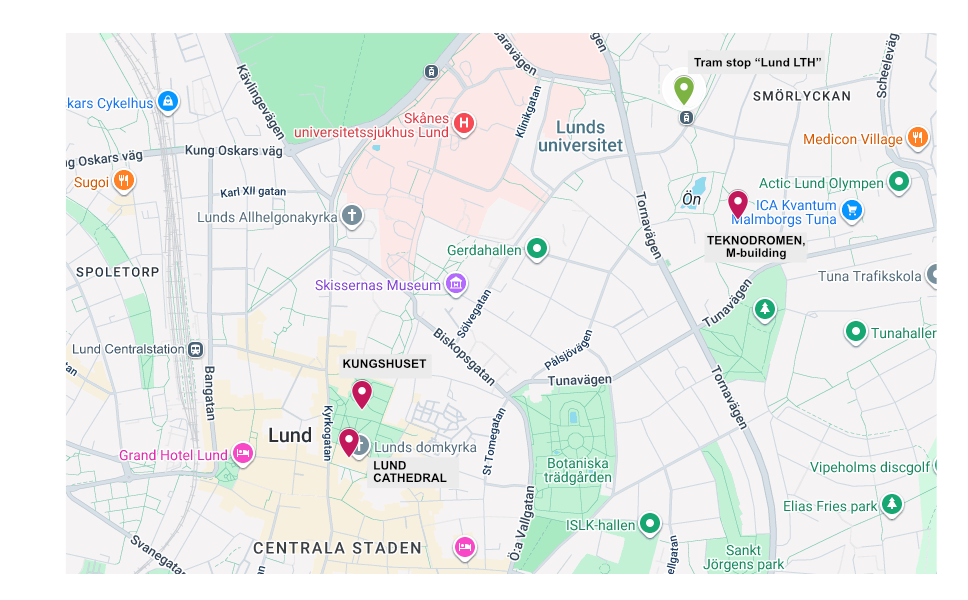

November 17, 2025

17:00 - 19:00

Carolinasalen, Kungshuset

Paradisgatan 2, 223 50 Lund

Welcome reception at Kungshuset

A welcome drink and some hors d’oeuvres will be served.

Day 1 – November 18, 2025

08:30 - 09:00

Teknodromen, M-building, LTH

Ole Römers väg 1, 223 63 Lund

Registration

09:00 - 09:30

Opening and Introduction

09:30 - 10:15

Robot Learning Through Interactions

Jens Kober

Associate Professor at Technical University of Delft (The Netherlands)

Abstract

The acquisition and self-improvement of novel motor skills is among the most important problems in robotics. Complexity arises from interactions with their environment and humans. A human teacher is always involved in the learning process, either directly (providing data) or indirectly (designing the optimization criterion), which raises the question: How to best make use of the interactions with the human teacher to render the learning process efficient and effective? I will discuss various methods we have developed in the fields of supervised learning, imitation learning, reinforcement learning, and interactive learning and illustrate those with real robot experiments ranging from fun (ball-in-a-cup) to more applied (retail environments).

Biography

Jens Kober is an associate professor at TU Delft, Netherlands. He is a member of the Cognitive Robotics department (CoR) and the TU Delft Robotics Institute.

Jens is the recipient of the Robotics: Science and Systems Early Career Award 2022 and the IEEE-RAS Early Academic Career Award in Robotics and Automation 2018. His Ph.D. thesis won the 2013 Georges Giralt PhD Award as the best Robotics PhD thesis in Europe in 2012.

Jens was an assistant professor at TU Delft (2015-2019), first at the Delft Center for Systems and Control (DCSC) and later at CoR. He worked as a postdoctoral scholar (2012-2014) jointly at the CoR-Lab, Bielefeld University, Germany and at the Honda Research Institute Europe, Germany.

From 2007 to 2012 he worked with Jan Peters as a master’s student and subsequently as a Ph.D. student at the Robot Learning Lab, Max-Planck Institute for Intelligent Systems, Empirical Inference Department (formerly part of the MPI for Biological Cybernetics) and Autonomous Motion Department. Jens graduated in spring 2012 with a Doctor of Engineering “summa cum laude” from the Intelligent Autonomous Systems Group, Technische Universität Darmstadt.

Jens holds degrees (MSc equivalent) in control engineering from University of Stuttgart and in general engineering from the École Centrale Paris (ECP).

He has been a visiting research student at the Advanced Telecommunication Research (ATR) Center, Japan, and an intern at Disney Research Pittsburgh, USA.

Jens is an ELLIS Scholar and IEEE Senior Member. Jens served as co-chair of the IEEE-RAS TC Robot Learning (2016-2021), as the Virtual Conference Arrangements Chair for Robotics: Science and Systems 2020, as a Program Chair for the Conference on Robot Learning 2020, as the Finance Chair for the International Conference on Advanced Intelligent Mechatronics 2021, as associate editor for the IEEE Transactions on Robotics, and as editorial board member of the Journal of Machine Learning Research. He currently serves as a Local Arrangements Chair for Robotics: Science and Systems 2024, Secretary of the Robot Learning Foundation, as Member of the International Advisory Board – CIIRC – Czech Technical University in Prague, as board member of the ELLIS Unit Delft, as senior editor for the IEEE Robotics and Automation Letters, as associate editor for the IEEE/ASME Transactions on Mechatronics, as editor for the IEEE/RSJ International Conference on Intelligent Robots and Systems, as well as area chair/associate editor for numerous conferences. He has served as reviewer for most well-known journals and conferences in the fields of machine learning and robotics.

10:15 - 10:45

Coffee

10:45 - 11:30

Making Mobile Manipulation Real: New Learning Paradigms for Robots

Roberto Martín-Martín

Assistant Professor at University of Texas (USA)

Abstract

Most tasks people wish robots could do (fetching objects across rooms, assisting in the kitchen, tidying) require mobile manipulation, the integration of navigation and manipulation. While robots have made remarkable progress in each skill independently, bringing them together sequentially (navigate>manipulate>navigate…) or simultaneously (coordinating base and arm motion to open an oven or wipe a table) remains one of the hardest challenges in robotics. The difficulty lies not only in mastering two complex capabilities, but in coupling them safely and efficiently, over long horizons, under uncertainty, and in contact‑rich settings. These conditions often break the assumptions of standard imitation and reinforcement learning, which tend to struggle to generalize, train safely, and anticipate, learn from, and recover from errors in unstructured environments. In my lab, we are exploring new directions in robot learning that aim to bridge this gap: developing methods that allow robots to learn directly from diverse human video experiences, leveraging simulation to build structured and physically grounded action representations that scale to the real world, and integrating long-term memory and reasoning using foundation models. Our latest work shows how these principles can enable robots to perform multi-step household activities, learn manipulation behaviors in minutes rather than days, and generalize across unseen environments and objects. Looking forward, I will discuss how unifying and extending these ideas could lay the groundwork for a new generation of adaptive, reliable, and socially integrated robots that learn continually and collaborate safely in the open world.

Biography

Roberto Martín-Martín is an Assistant Professor of Computer Science at the University of Texas at Austin. His research bridges robotics, computer vision, and machine learning, focusing on enabling robots to operate autonomously in human-centric, unstructured environments such as homes and offices. To this end, he develops advanced AI algorithms grounded in reinforcement learning, imitation learning, planning, and control, while addressing core challenges in robot perception, including pose estimation, tracking, video prediction, and scene understanding. His work spans mobile and whole-body manipulation, dexterous and contact-rich interactions, and long-horizon tasks.

He earned his Ph.D. from the Berlin Institute of Technology (TUB) under Oliver Brock, followed by postdoctoral research at the Stanford Vision and Learning Lab with Fei-Fei Li and Silvio Savarese. His contributions have been recognized with numerous honors, including the RSS Best Systems Paper Award, ICRA Best Paper Award, IROS Best Mechanism Award, Amazon Faculty Award, RSS Pioneer, AAAI Young Faculty and IJCAI Early Faculty distinctions, and as part of the winning team of the Amazon Picking Challenge. Beyond academia, he serves as Chair of the IEEE Technical Committee on Mobile Manipulation and is a co-founder of QueerInRobotics.

11:30 - 12:15

Open World Embodied Intelligence: Learning from Perception to Action in the Wild

Abhinav Valada

Professor at University of Freiburg (Germany)

Abstract

A longstanding goal in robotics is to build agents that learn from the world and assist people in everyday tasks across homes, factories, and streets. This talk outlines a path to open world autonomy that learns continuously, reasons with language and vision, and closes the loop from perception to action. I will present representations that capture objects, relations, and articulation, online learning that adapts during deployment without forgetting, and uncertainty-aware decision making that knows when to ask for clarification, seek information, or recover. I will also discuss data and model efficiency in policy learning for long-horizon tasks, including from demonstrations, teleoperation, and world models for rapid offline adaptation. I will conclude with a discussion of safety, fairness, and responsible deployment, so that learning-enabled autonomy earns trust and delivers value to society.

Biography

Abhinav Valada is a Full Professor at the University of Freiburg, where he directs the Robot Learning Lab. He is a member of the Department of Computer Science, the BrainLinks-BrainTools center, and a founding faculty member of the ELLIS Unit Freiburg. Abhinav is a DFG Emmy Noether AI Fellow, Scholar of the ELLIS Society, and Chair of the IEEE Robotics and Automation Society Technical Committee on Robot Learning. He received his Ph.D. with distinction from the University of Freiburg and his M.S. in Robotics from The Robotics Institute of Carnegie Mellon University. He co-founded and served as the Director of Operations of Platypus LLC, a company developing autonomous robotic boats, and has previously worked at the National Robotics Engineering Center and the Field Robotics Center of Carnegie Mellon University. Abhinav’s research lies at the intersection of robotics, machine learning, and computer vision, with a focus on tackling fundamental robot perception, state estimation, and planning problems to enable robots to operate reliably in complex and diverse domains. For his research, he received the IEEE RAS Early Career Award in Robotics and Automation, the NVIDIA Research Award, and the AutoSens Most Novel Research Award, among others. Many aspects of his research have been prominently featured in wider media such as the Discovery Channel, NBC News, Business Times, and The Economic Times.

12:15 - 13:30

Lunch

13:30 - 14:15

Scaling Autonomy: Towards Embodied AI for Real-world Autonomous Mobile Robots

Olov Andersson

Assistant Professor at KTH (Sweden)

Abstract

I will present our efforts toward Embodied AI for autonomous mobile robots. Guided by our experiences with real-world autonomy in the DARPA SubT Challenge, we consider key scaling challenges of classical autonomy and show examples where advances in AI and foundation models hold great potential to improve autonomy and reduce the cost of deploying robots in uncontrolled real-world environments.

Biography

Olov Andersson is an Assistant Professor at KTH Royal Institute of Technology, where he has led a new research group in Embodied AI for Autonomous Robots since 2024. He received his PhD degree in Computer Science from Linköping University in 2020. From 2020 to 2024 he was a postdoc and senior researcher at ETH Zurich in the Autonomous Systems Lab under Prof. Roland Siegwart. There he co-led their part of the winning team, CERBERUS, in the DARPA Subterranean (SubT) Challenge Finals. Olov is a WASP Fellow and serves as Associate Editor of the IEEE Robotics and Automation Letters (RA-L).

14:15 - 15:00

Safe and Robust Real-World Robot Learning

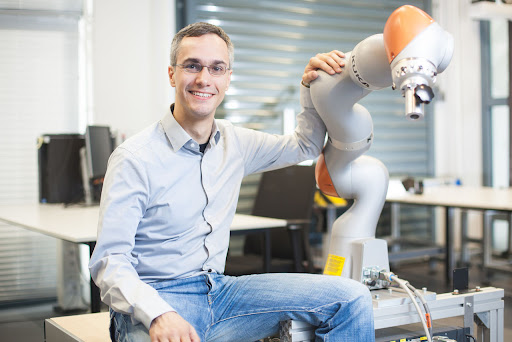

Davide Tateo

Senior Lecturer at Lund University (Sweden)

Abstract

Nowadays, it is clear that we need to incorporate learning methods to develop the robots of the future. However, learning is not enough to empower the robot to deal with challenging real-world scenarios: we need to enable the robots to adapt dynamically to the environment with online learning. To allow online learning in real robotic systems, we need to solve three key challenges: efficient learning, robustness to disturbances, and satisfaction of safety constraints. In this talk, we will discuss these challenges and show how to deploy learning methods in complex contact-rich dynamic tasks such as the robot Air Hockey setting.

Biography

Davide Tateo has been a Senior Lecturer in the Robotics and Semantic Systems Group at Lund University since October 1st, 2025. Davide received his Ph.D. in Information Technology from Politecnico di Milano (Milan, Italy) in February 2019. Afterwards, he joined the Intelligent Autonomous Systems Group of Prof. Jan Peters.

During his stay at TU Darmstadt, as a research group leader, Davide led the Safe and Reliable Robot Learning Research Group. The main goal of his research group was to develop learning algorithms that can be deployed on real systems. To achieve this objective, the group focused on fundamental properties of the learning algorithm, such as acting under (safety) constraints, robustness, and learning efficiency.

15:00 - 15:30

Coffee

15:30 - 16:15

Learning-Based Perception and Navigation for Autonomous Robotic Missions

Christoforos Kanellakis

Associate Professor at Luleå University of Technology (Sweden)

Abstract

Autonomous robotic systems are increasingly deployed in complex, dynamic environments where traditional rule-based approaches fall short. This presentation explores how learning-based methods can enhance robotic perception and navigation capabilities. By leveraging semantic scene understanding, multi-modal sensor integration, and adaptive planning, robots can construct meaningful representations of their surroundings and make informed decisions. The talk synthesizes recent contributions from our lab across belief modeling, traversability estimation, and semantic-aware compression for multi-agent systems, demonstrating how these approaches support robust operation in mission-critical scenarios.

Biography

Christoforos Kanellakis received his Ph.D. in 2020 from the Control Engineering Group at Luleå University of Technology (LTU), Sweden, and his Diploma in Electrical and Computer Engineering from the University of Patras (UPAT), Greece, in 2015. He is currently an Associate Professor with the Robotics and Artificial Intelligence Group at LTU.

In the past he has been affiliated with NASA’s Jet Propulsion Laboratory (JPL), where he conducted research under the mentorship of Dr. Ali Agha and Prof. Joel Burdick as part of a research visit within the DARPA Subterranean (SubT) Challenge. His research interests encompass scene representation and understanding, and multi-modal sensing for autonomous robotic systems. He focuses on guidance and navigation in complex environments, with contributions in multi-agent coordination, vision-aided aerial manipulation, and field-hardened autonomy for mission-critical applications such as subterranean exploration, industrial inspection, and environmental monitoring.

16:15 - 17:00

Panel discussion

Day 2 – November 19, 2025

08:45 - 09:30

Structural Understanding – The Grand Challenge of Robot Learning

Justus Piater

Professor at University of Innsbruck (Austria)

Abstract

AI has made great progress in recent years, and the sophistication of robots has been rising with costs falling. Yet, the capabilities of AI-enabled robots are not keeping pace. I argue that this is due to a lack of structural understanding by current AI systems. I will discuss several lines of research in my lab that seek to enable robots to generalize better and learn faster thanks to explicit notions of structure.

Biography

Justus Piater is a professor of computer science at the University of Innsbruck, Austria, where he leads the Intelligent and Interactive Systems group. He earned his Ph.D. degree at the University of Massachusetts Amherst, USA, and was a visiting researcher at the Max Planck Institutes for Biological Cybernetics and for Intelligent Systems in Tübingen, Germany. His research interests focus on learning and inference in sensorimotor systems, published in more than 200 papers, several of which have received best-paper awards. He served as Dean of the Faculty of Mathematics, Computer Science and Physics and acts as the founding director of the Digital Science Center at the University of Innsbruck, and is a Fellow of the European Laboratory for Learning and Intelligent Systems (ELLIS).

09:30 - 10:15

From Passive Learner to Pro-Active and Inter-Active Learner

Dongheui Lee

Professor at TU Vienna (Austria)

Abstract

Autonomous motor skill learning and control are central challenges in the development of intelligent robotic systems. Imitation learning offers an efficient approach, enabling robots to acquire new skills from human guidance while reducing the time and cost of manual programming. Traditional approaches to robot learning from demonstration tend to render the robot a passive learner, confined largely to motion planning derived from the current observations. To progress beyond the traditional paradigm of imitation learning, it is essential to develop methods that allow robots to continuously learn new skills and to refine previously learned ones, if necessary, particularly in uncertain or dynamic environments. This may require the ability to reason about the robot’s own actions, or to extend its knowledge through proactive and interactive engagement with humans.

Biography

Dongheui Lee (이동희) is Full Professor of Autonomous Systems at the Institute of Computer Technology, Faculty of Electrical Engineering and Information Technology, TU Wien. She has also been leading a Human-centered assistive robotics group at the German Aerospace Center (DLR) since 2017. Previously, she was Associate Professor of Human-centered Assistive Robotics at the TUM Department of Electrical and Computer Engineering, Assistant Professor of Dynamic Human Robot Interaction at TUM, Project Assistant Professor at the University of Tokyo (2007-2009), and a research scientist at the Korea Institute of Science and Technology (KIST) (2001-2004). She obtained a PhD degree from the Department of Mechano-Informatics, University of Tokyo, Japan in 2007. She was awarded a Carl von Linde Fellowship at the TUM Institute for Advanced Study (2011) and a Helmholtz professorship prize (2015). She has served as Senior Editor and a founding member of IEEE Robotics and Automation Letters (RA-L), Associate Editor for the IEEE Transactions on Robotics, and an elected IEEE RAS AdCom member. Her research interests include human motion understanding, human robot interaction, machine learning in robotics, and assistive robotics.

10:15 - 10:45

Coffee

10:45 - 11:30

Robot learning on the edge: Online learning in hardware

Thomas Berrueta

Caltech (USA)

Abstract

Biography

Thomas Berrueta is an interdisciplinary roboticist and Postdoctoral Scholar at the California Institute of Technology’s Computing and Mathematical Sciences Department. Since joining Prof. Soon-Jo Chung’s Autonomous Robotics and Control Laboratory, Thomas has led algorithm design and development for high-performance robotic hardware platforms, such as spacecraft and autonomous race cars traveling at over 250kph. He received a Ph.D. in Mechanical Engineering from Northwestern University under the supervision of Prof. Todd Murphey, where he was awarded the Presidential Fellowship—the highest honor conferred to a graduate student by the university—and named a Microsoft Future Leader in Robotics and AI. Moreover, his work has been featured in coverage from news outlets like Ars Technica, Popular Mechanics, Scientific American, Gizmodo, and Science. Thomas Berrueta’s research explores the role of embodiment in robot learning and control, seeking to make autonomous systems more adaptable, robust, and life-like by integrating physical self-awareness with techniques from reinforcement learning, stochastic processes, and optimal control.

11:30 - 12:15

The Puzzle of Endowing Robots with Cloth Manipulation Skills

Carme Torras

Research Professor at UPC Technical University of Catalonia (Spain)

Abstract

Manipulating textile objects in a versatile way remains a challenging problem in robotics, triggered by applications in assistive and industrial domains. The vast number of degrees of freedom involved in deformations renders the methods developed for rigid objects unfeasible. Two main frameworks have emerged to handle such complexity: physical model-based approaches and data-driven learning approaches. We are trying to combine the best of both worlds by assembling a puzzle with the different components required to endow robots with both quasi-static and dynamic cloth manipulation skills. Such components are new methods for physical cloth modelling and simulation, compact cloth-state representation, grasping and manipulation taxonomies, skill data acquisition through virtual reality, learning by demonstration and reinforcement learning, model-predictive control, as well as gripper designs with specific cloth-handling functionalities. Robot prototypes integrating some of the above components will be shown in the talk. The work of a highly interdisciplinary team is acknowledged, including mathematicians, computer scientists, mechanical, industrial, telecommunication, and software engineers, as well as a philosopher dealing with the ethical deployment of assistive robots.

Biography

Carme Torras is Research Professor at the Spanish Scientific Research Council (CSIC). She received M.Sc. degrees in Mathematics and Computer Science from the Universitat de Barcelona and the University of Massachusetts, respectively, and a Ph.D. degree in Computer Science from the Technical University of Catalonia (UPC). She is an IEEE Fellow and EurAI Fellow, and was awarded an ERC Advanced Grant in 2016 and the Spanish National Research Award in Mathematics and Information Technologies in 2020.

12:15 - 13:30

Lunch

13:30 - 14:15

Are We Ready for Snow-tonomous Driving? Toward Robust Automated Driving in Extreme Weather

Eren Aksoy

Associate Professor at Lund University (Sweden)

Abstract

Over the past decade, significant investments have been made globally into the development of autonomous vehicle technologies. Industry and academia have dedicated a significant amount of financial, scientific, and infrastructural resources to collect extensive data over millions of kilometers on public roads and to train advanced AI-based automated driving algorithms. However, not every kilometer driven is equal! Most automated driving systems have, to date, been primarily trained and tested in clear or moderately degraded conditions. This strong bias poses a critical challenge for ensuring robust performance in harsh weather scenarios such as dense fog, heavy rain, or snowfall, which severely impact sensor reliability and the performance of perception and control algorithms.

In this talk, I will introduce the EU-funded Horizon Europe Innovation Action project ROADVIEW, which aims to develop robust and cost-efficient perception and decision-making systems for automated driving in extreme weather conditions. After a brief overview of the project, I will share our latest data-driven weather-aware perception and control solutions evaluated under challenging weather conditions. I will also introduce our newly collected multimodal multitask dataset captured under active snowfall. Finally, I will conclude by presenting experimental findings revealing the limitations of current state-of-the-art approaches in semantic segmentation, object detection, and 3D point cloud filtering, particularly under snowy and adverse weather conditions, highlighting the critical gaps that must be addressed to achieve truly robust snow-tonomous driving systems.

Biography

Eren Aksoy is an Associate Professor (Docent) at Lund University, Sweden. He coordinates the Horizon Europe project ROADVIEW, which focuses on robust automated driving in extreme weather. He obtained his Ph.D. in computer science from the University of Göttingen, Germany, in 2012. During his Ph.D. studies, he invented the concept of Semantic Event Chains to encode, learn, and execute human manipulation actions in the context of robot imitation learning. His framework has been employed as a technical robot perception-action interface in several EU projects such as IntellACT, Xperience, and ACAT. Prior to relocating to Sweden, he spent three years as a postdoctoral research fellow in the H2T group led by Prof. Dr. Tamim Asfour at the Karlsruhe Institute of Technology. Recently, as a visiting scholar, he conducted research on AI-based perception algorithms for autonomous vehicles at Volvo GTT and Zenseact AB in Sweden. Dr. Aksoy holds editorial appointments as Editor and Associate Editor for top-tier robotics journals and conferences, including RA-L, the Robot Learning Journal, ICRA, IROS, Humanoids, and ITSC. His research interests include scene semantics, computer vision, AI, and cognitive robotics. He actively works on creating semantic representations of visual experiences to improve the environment and action understanding of autonomous systems, such as robots and unmanned vehicles.

14:15 - 15:00

Sample-efficient Data-driven Manipulation with Equivariant Models and Fast Training

Renaud Detry

Associate Professor at KU Leuven (Belgium)

Abstract

I will first discuss a novel approach to imitation learning that enables the training of multi-task robot diffusion policies using just a few hours on an inexpensive gaming GPU. Compared to the state of the art, our method reduces training time by 95% and memory usage by 93%, while maintaining 95% of the original performance. Our approach exploits a key distinction between robot diffusion and the image diffusion techniques that inspired it. I will explain how the role of image data is reversed between image diffusion and robot diffusion, and how we leverage this asymmetry to reduce training costs. Second, I will present methods to enhance robot manipulation models through equivariance—designing models that retain their properties under spatial transformations of input data. We introduce a new grasping model that is equivariant to rotations within the table plane, resulting in significant gains in sample efficiency. Our model is based on a “tri-plane” representation of 3D data, created by projecting 3D features onto the three canonical planes: XY, XZ, and YZ. We propose a new tri-plane design in which features on the horizontal plane are equivariant to 90° rotations around the Z-axis, while the sum of corresponding features in the other two planes remains invariant to the reflections induced by such rotations. Additionally, we develop a new equivariant grasp planner that models gripper orientations with an equivariant generative model based on flow matching.

Biography

Renaud Detry is Associate Professor of Robot Learning at KU Leuven in Belgium, with a dual appointment in the Electrical and Mechanical Engineering groups (PSI and RAM). Previously, he served as group lead for the Perception Systems group at NASA JPL, Pasadena, CA, and held an Assistant Professorship at UCLouvain, Belgium. His research interests lie in robot learning and computer vision, particularly concerning robot manipulation and space robotics. While at JPL, Renaud led machine vision efforts for the surface mission of the NASA/ESA Mars Sample Return campaign. He is Associate Editor for IEEE Transactions on Robotics (T-RO), a member of the ELLIS Society, a member of the steering committee of Leuven.AI, and a technical advisor for Opal-AI.com.

15:00 - 15:30

Coffee

15:30 - 16:15

The Atomic Skill Approach to Robotic Dexterity

Haozhi Qi

Research Scientist at Meta FAIR and Incoming Assistant Professor at University of Chicago (USA)

Abstract

Human hands are essential for sensing and interacting with the physical world, enabling us to manipulate objects with ease. Replicating this level of dexterity in robots remains a longstanding challenge, and a key milestone toward general-purpose robotics. While modern AI has made remarkable advances in vision and language, robotic dexterity remains unsolved due to the complexity of high-dimensional control, limited real-world data, and the need for rich multisensory feedback. In this talk, I will present the atomic skill approach to dexterity: first learning reusable, generalizable low-level manipulation skills, then composing them through high-level policies to achieve complex behaviors. I will illustrate this framework with examples including in-hand reorientation, thread fastening, and coordinated humanoid manipulation.

Biography

Haozhi Qi is a research scientist at Meta FAIR and an incoming assistant professor in the Department of Computer Science at the University of Chicago. He received his Ph.D. in Electrical Engineering and Computer Sciences from UC Berkeley, working with Prof. Yi Ma and Prof. Jitendra Malik.

His research lies at the intersection of robot learning, computer vision, and tactile sensing, with the goal of developing physically intelligent and dexterous robots for unstructured environments. His work on in-hand perception was featured as the cover article in Science Robotics. His honors include UC Berkeley’s Lotfi A. Zadeh Prize, the Outstanding Demo Award at the NeurIPS Robot Learning Workshop, and the EECS Evergreen Award for Undergraduate Researcher Mentoring. More information is available at https://haozhi.io.

16:15 - 17:00

Panel discussion

19:00

Maryhill Estate

Ålabodsvägen 193, 261 63 Glumslöv

Symposium dinner

Bus transport to the dinner venue Maryhill Estate departs from Lund Cathedral at 18:00

Day 3 – November 20, 2025

08:45 - 09:30

Frugal Learning of Manipulation Skills

Sylvain Calinon

Senior Research Scientist at Idiap Research Institute and Lecturer at EPFL (Switzerland)

Abstract

Despite significant advances in AI, robots still struggle with tasks involving physical interaction. Robots can easily beat humans at board games such as Chess or Go but struggle to skillfully move the game pieces by themselves (the part of the task that humans subconsciously succeed in). Learning manipulation skills is both hard and fascinating because the movements and behaviors to acquire are tightly connected to our physical world and to embodied forms of intelligence.

I will present an overview of representations and learning approaches to help robots acquire manipulation skills by imitation and self-refinement. I will present the advantages of targeting a frugal learning approach, where the term “frugality” has two goals: 1) learning manipulation skills from only a few demonstrations or exploration trials; and 2) learning only the components of the skill that really need to be learned.

Toward this goal, I will emphasize the roles of geometry, manifolds, implicit shape representations and distance fields as inductive biases to facilitate human-guided manipulation skill acquisition. I will also show how ergodic control can provide a mathematical framework to generate exploration and coverage movement behaviors, which can be exploited by robots as a way to cope with uncertainty in sensing, proprioception and motor control.

Biography

Sylvain Calinon is Senior Research Scientist at the Idiap Research Institute, with research interests covering robot learning, optimal control, geometrical approaches, and human-robot collaboration. He is a lecturer at the Ecole Polytechnique Fédérale de Lausanne (EPFL).

His work focuses on human-centered robotics applications in which the robots can acquire new skills from only a few demonstrations and interactions. It requires the development of models that can exploit the structure and geometry of the acquired data in an efficient way, the development of optimal control techniques that can exploit the learned task variations and coordination patterns, and the development of intuitive interfaces to acquire meaningful demonstrations.

The developed approaches can be applied to a wide range of manipulation skills, with robots that are either close to us (assistive and industrial robots), parts of us (prosthetics and exoskeletons), or far away from us (teleoperation). Sylvain Calinon’s research is supported by the European Commission, by the Swiss National Science Foundation, by the State Secretariat for Education, Research and Innovation, and by the Swiss Innovation Agency.

09:30 - 10:15

Perceiving, Understanding, and Interacting through Touch

Roberto Calandra

Professor at Technical University of Dresden (Germany)

Abstract

Touch is a crucial sensor modality in both humans and robots. Recent advances in tactile sensing hardware have resulted — for the first time — in the availability of mass-produced, high-resolution, inexpensive, and reliable tactile sensors. In this talk, I will argue for the importance of creating a new computational field of “Touch processing” dedicated to the processing and understanding of touch through the use of Artificial Intelligence. This new field will present significant challenges both in terms of research and engineering, but also significant opportunities in digitizing a new sensing modality. To conclude, I will present some applications of touch in robotics and discuss other future applications.

Biography

Roberto Calandra is a Full (W3) Professor at the Technische Universität Dresden, where he leads the Learning, Adaptive Systems and Robotics (LASR) lab. Previously, he founded the Robotic Lab at Meta AI (formerly Facebook AI Research) in Menlo Park. Prior to that, he was a Postdoctoral Scholar at the University of California, Berkeley (US) in the Berkeley Artificial Intelligence Research (BAIR) Lab. His education includes a Ph.D. from TU Darmstadt (Germany), an M.Sc. in Machine Learning and Data Mining from Aalto University (Finland), and a B.Sc. in Computer Science from the Università degli Studi di Palermo (Italy).

His scientific interests are broadly at the intersection of Robotics and Machine Learning, with the goal of making robots more intelligent and useful in the real world. Among his contributions is the design and commercialization of DIGIT, the first commercial high-resolution compact tactile sensor, which is currently the most widely used tactile sensor in robotics. Roberto served as Program Chair for AISTATS 2020, as Guest Editor for the JMLR Special Issue on Bayesian Optimization, and has previously co-organized over 16 international workshops (including at NeurIPS, ICML, ICLR, ICRA, IROS, RSS). In 2024, he received the IEEE Early Academic Career Award in Robotics and Automation.

10:15 - 10:45

Coffee

10:45 - 11:30

How Can Robots Generalize Actions?

Peter Gärdenfors

Professor at Lund University (Sweden)

Abstract

Even after teleoperated instructions, robots have problems generalizing their actions to new situations. I begin with a diagnosis of the problem. Based on what is known about how humans understand actions and affordances, I suggest that actions should be represented as force patterns. This will generate an action space. Such a space can be used to generate new motor patterns in new situations. Learning the structure of the action space can be achieved through exploratory activities by the robot, similar to how children learn.

Biography

Professor Emeritus Peter Gärdenfors held various positions at the Department of Philosophy, Lund University, 1970-1980. He filled a vacancy as Associate Professor in Philosophy of Science at Umeå University for parts of 1975-1977, and was Associate Professor at the Department of Philosophy, Lund University, 1980-1988. Professor Gärdenfors held a research chair in Cognitive Science at the Swedish Council for Research in Humanities and Social Sciences, 1988-1994, and was Professor of Cognitive Science at Lund University, 1994-2016. He has been Senior Professor in Cognitive Science since 2016, was Adjunct Professor at University of Technology Sydney 2013-2019, and has been Senior Research Associate with the Palaeo-Research Institute at the University of Johannesburg since 2019.

11:30 - 12:15

Towards Learning with Stability Guarantees and Robustness to Irreversible Events

Pietro Falco

Associate Professor at University of Padua (Italy)

Abstract

As robots increasingly operate in dynamic, human-centered environments, their mistakes can lead to lasting and irreversible consequences—such as damaged objects, loss of trust, or even harm to people. In these contexts, the inherent trial-and-error nature of reinforcement learning (RL) raises a fundamental question: how can robots learn more safely and predictably while avoiding actions that compromise the learning process or cause irreversible outcomes?

This talk explores possible methods that incorporate stability guarantees and robustness against irreversible events into the RL framework. Through applications in manipulation and industrial robotics, I will illustrate how these principles can potentially contribute to bridging the gap between theoretical RL and real-world deployment. Ultimately, this work aims to enable learning-based robots that are not only adaptive and capable but also more reliable in real environments.

Biography

Pietro Falco received his Ph.D. in Electronic Engineering in 2012 and subsequently held a Postdoctoral position at the Second University of Naples until February 2015. From December 2010 to July 2011, he was a Visiting Scholar at the Karlsruhe Institute of Technology (KIT), under the supervision of Prof. Rüdiger Dillmann. In 2011, he co-founded Aeromechs S.r.l., a company still active in the field of intelligent energy management systems for aeronautics. In 2015, he moved to Munich, where he received a TUM Foundation Fellowship at the Technical University of Munich and later a Marie Curie Individual Fellowship for Experienced Researchers. In 2018, Pietro joined ABB Corporate Research in Västerås, Sweden, as a Senior Scientist and Project Manager, where he led diverse internal and European research projects. In 2024, Pietro returned to Italy at the University of Padua, where he currently serves as an Associate Professor.

His research interests span machine learning for robotics, human manipulation observation, robotic mobile manipulation, and large language models for robotics.

12:15 - 13:30

Lunch

13:30 - 14:15

Learning in Perception and Action Loop for Efficient Manipulation with Uncertainty

Jing Xiao

Professor at Worcester Polytechnic Institute (USA)

Abstract

Many robotic applications require a robot to manipulate objects in the presence of uncertainty or unknowns. The robot must rely on sensing and perception to guide its actions and obtain feedback about the outcomes to handle errors due to uncertainties. In this talk, I will discuss closing the perception and action loop in autonomous complex and contact-rich robotic manipulation, such as complex assembly and deformable linear object manipulation, and how learning is incorporated to play a key role in efficient perception of uncertain or unknown task states to facilitate general robot manipulation capabilities.

Biography

Jing Xiao is the Deans’ Excellence Professor, William B. Smith Distinguished Fellow in Robotics Engineering, Professor and Head of the Robotics Engineering Department, Worcester Polytechnic Institute (WPI). She received her PhD in Computer, Information, and Control Engineering from the University of Michigan. She led the Robotics Engineering Program to become the first full-fledged Robotics Engineering Department in the U.S. in July 2020, offering the most comprehensive degree programs from bachelor’s to PhD in robotics engineering. She is the Site Director of the NSF Industry/University Cooperative Research Center on Robots and Sensors for Human Well-being (ROSE-HUB) at WPI. Before joining WPI in 2018, she was a professor and served as the Associate Dean for Research in the College of Computing, the University of North Carolina at Charlotte, where she was also the recipient of the Outstanding Faculty Research Award in 2015. She also served as the U.S. National Science Foundation Program Director for the Robotics and Human Augmentation Program. Her research spans robotics, haptics, multi-modal perception, and artificial intelligence, with two highly related themes: one is real-time adaptiveness of robots to uncertainty and uncertain changes in an environment based on perception, and the other is robot manipulation in the presence of unknowns and uncertainties. Jing Xiao is an Editor of the IEEE Transactions on Robotics. She is an IEEE Fellow. She is a recipient of the 2022 IEEE Robotics and Automation Society George Saridis Leadership Award in Robotics and Automation. More on https://www.wpi.edu/people/faculty/jxiao2

14:15 - 15:00

To Know or To See: Few- and Zero-shot Object Perception for Robotic Manipulation

Rudolph Triebel

Professor at Robotics Institute of DLR and KIT (Germany)

Abstract

To manipulate objects, be it for pick-and-place tasks or for functional grasping, robots first need to detect the objects, find their 3D-pose, and determine an appropriate pose for the gripper. Despite recent progress in these fields, major challenges for the application in realistic robotic contexts still remain. These include the ability to quickly adapt to new objects that are not contained in the training data, the representation of multi-modal predictive distributions for object states, and an effective way to incorporate prior knowledge, e.g. from the robot’s kinematics. In this talk, I will show recent examples that address these challenges, such as diffusion-based zero-shot object segmentation, few-shot object perception for pose estimation and reconstruction, and a probabilistic fusion method leveraging vision and robot kinematics.

Biography

Prof. Triebel received his PhD in 2007 from the University of Freiburg in Germany with a thesis on “Three-dimensional Perception for Mobile Robots”. From 2007 to 2011, he was a postdoctoral researcher at ETH Zurich, where he worked on machine learning algorithms for robot perception within several EU-funded projects. Then, from 2011 to 2013 he worked in the Mobile Robotics Group at the University of Oxford, where he developed unsupervised and online learning techniques for detection and classification applications in mobile robotics and autonomous driving. From 2013 to 2023, he worked as a lecturer at TU Munich, where he taught master level courses in the area of Machine Learning for Computer Vision. In 2015, he was appointed as the leader of the Department of Perception and Cognition at the Robotics Institute of DLR, and in 2023 he was also appointed as a university professor at Karlsruhe Institute of Technology (KIT) in “Intelligent Robot Perception”.

15:00 - 15:30

Coffee

15:30 - 16:30

Panel Discussion and Closing Remarks

Map